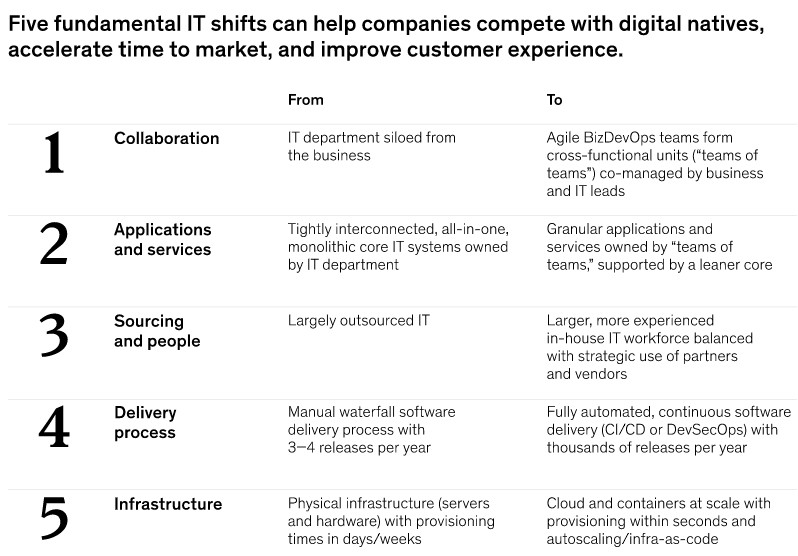

In pursuing an agile transformation, CEOs and CIOs can find common ground in five IT shifts that can enable traditional players to compete with digital disrupters.

Five shifts are required to compete with digital natives: creating truly cross-functional teams with co-leadership of business and IT, decoupling core systems, nurturing engineering talent, automating software delivery, and adopting cloud infrastructure (Exhibit 1). Each of the shifts contributes to enterprise agility through specific business outcomes, addressing the objectives of both the CEO and CIO: speed of delivery, customer experience, quality, productivity, and total cost of ownership.

5 Fundamental IT Shifts

1: Collaboration: From siloed IT department to cross-functional agile teams

A common complaint among CEOs is that the IT department is like a black hole; they see delayed projects and overrun budgets, and it can be a struggle to measure IT productivity. On the flip side, CIOs note that the business often throws an endless string of new requirements over the fence that IT doesn’t have the capacity to deliver, let alone manage the corresponding technical debt. In traditional structures, the process of defining and aligning business and IT requirements can take three to six months before the first line of code is even written.

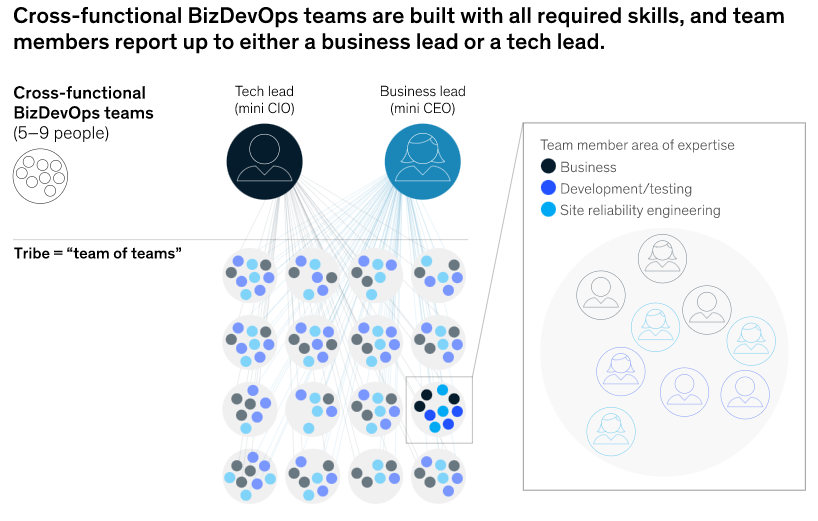

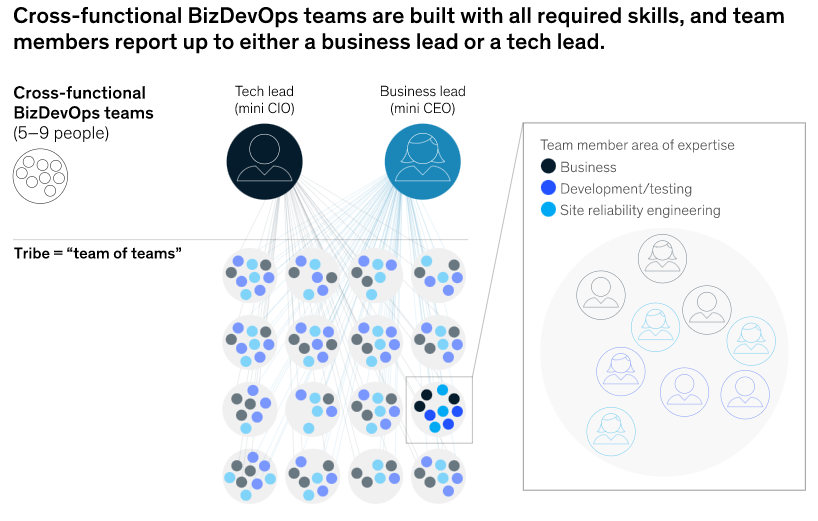

Overcoming this dichotomy requires shifting the collaboration model away from an isolated IT department and toward cross-functional teams that contain a mix of business-line and IT professionals. By achieving missions with as few handovers as possible, these teams are crucial to increasing the speed of development, launch, and feedback integration. At the core of this model are “BizDevOps” teams of five to nine people that have all the required skills to deliver a mission: business, developing and testing, and site reliability engineering (Exhibit 2). Business team members include product owners, product experts, and customer experience experts who drive product needs based on the voice of the customer and ROI. Engineers drive the production of shippable software on a daily basis, as well as automation to release and operate reliably in production. Daily interaction allows the team to reduce requirements alignment time from months to days or even hours, radically reducing time to market and the need for communicating through the bureaucracy.

In practice, these BizDevOps teams work in parallel to support different areas of the business. For example, multiple European and Asian banks and telecom operators have established a large number of these teams, which ladder up into “teams of teams” known as “tribes.” In these companies, segment tribes bundle products for specific business segments and support commercial activities, while product tribes develop product features and product-specific customer journeys. To counterbalance the autonomy of the segment and product tribes and to preserve architectural consistency and IT cost efficiency, companies also establish platform tribes that deliver common services, providing reusable components to facilitate the work of engineers in business tribes. Examples include cybersecurity-as-a-service, infrastructure-as-a-service, and data-as-a-service tribes that provide automated self-service tools, as well as core IT tribes that hold complex legacy systems that span multiple tribes and can’t (yet) be distributed.

In many cases, the tribes absorb the entire IT staff and take ownership of IT systems, and the traditional IT department ceases to exist. However, the need—and responsibility of the CIO—remains to supervise technical debt and the technical quality of delivery and uptime, as well as attract and develop IT talent. To achieve a balance, companies can ensure each tribe has both a business lead (“mini CEO”) and an IT lead (“mini CIO”). Often, the business-tribe leads report to the head of business (typically an executive committee member such as the chief commercial officer), and the IT leads report to the CIO, ensuring a level of control and accountability by the CIO.

2: Applications and services: From a monolith IT core to granular applications and services isolated by APIs and owned by teams of teams

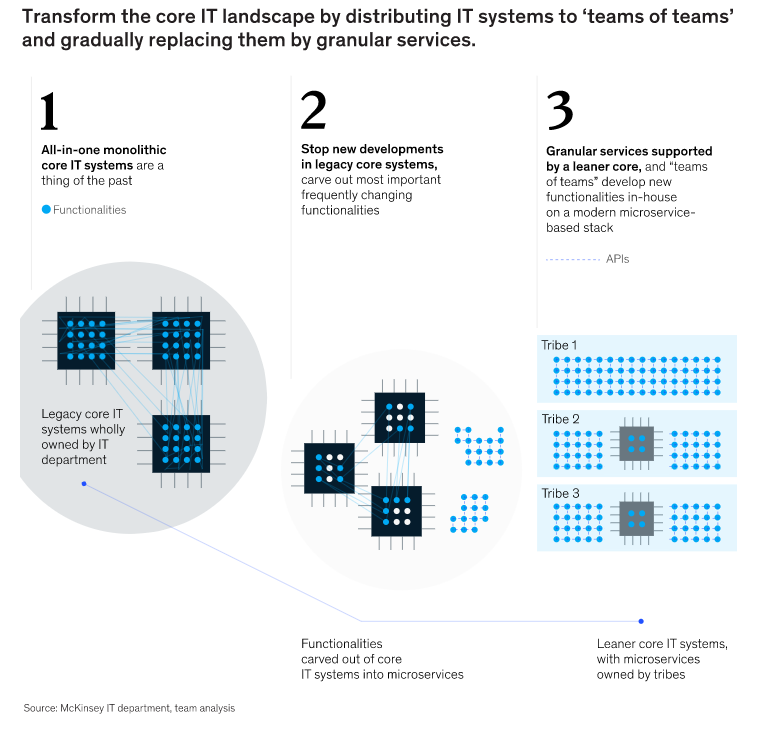

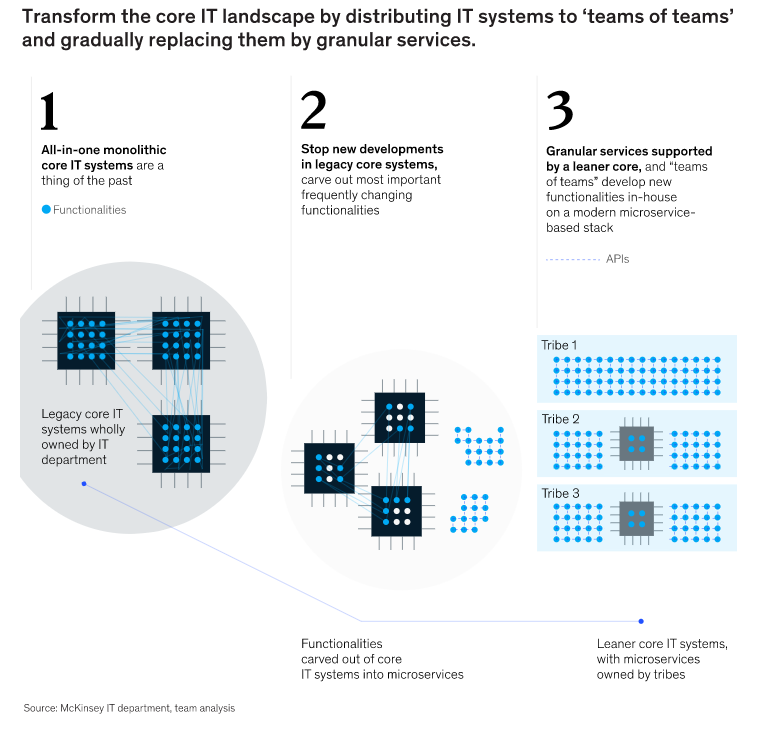

Monolithic, “all-in-one” IT systems are a thing of the past; today’s IT systems need to be granular enough to evolve independently on a daily basis. Traditionally, core systems—such as core banking systems in banks and business support systems in telecom—have been the hub for a multitude of functionalities, all tied inside one application or several interconnected monolith applications with a spaghetti-like array of interconnections. While this structure has some scale and computation-speed advantages, a change in one functionality typically causes the need to perform regression testing on all the other functionalities to make sure nothing is broken. Furthermore, many of the affected systems from the 1990s and 2000s were optimized for brick-and-mortar retail channels or even back-office functions, leaving out mobile, web, partner API, and other digital channels that have appeared since then and increased complexity. For example, at one bank, changing a tariff or creating a new product required changes in up to 30 systems, which led to parallel developments in several departments and weeks of regression testing to find flaws in each of them.

Replacements of such core systems have always been associated with a significant cost—to the tune of €50 million to more than €500 million over a multiyear program. But that’s not necessarily the best path forward for all companies.

Instead, the pressure to deliver great customer experiences while spending money wisely has led a number of agile companies to adopt the “Strangler pattern.” This approach involves selecting the most frequently changing functionalities (such as loan-origination journeys, product catalogs or tariff modules, scoring engines, data models, or customer-facing journeys), assigning ownership for these functionalities to business or platform tribes, and setting up dedicated BizDevOps teams to create granular and specialized services (often called microservices). These services follow a “one service–one function” principle, carving out what doesn’t belong in legacy systems and leaving a leaner core (Exhibit 3). At its base, this approach cuts down on the time to develop and revise functionalities, reducing the total cost of ownership.
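The routing idea behind this approach can be illustrated with a minimal sketch. It assumes a hypothetical facade that sits in front of the legacy core and sends requests for carved-out functionalities to new services, while everything else still reaches the core. The names `StranglerFacade`, `legacy_core`, and `pricing_service` are illustrative assumptions and do not come from any of the companies described here.

```python
from typing import Callable, Dict

Handler = Callable[[dict], str]


def legacy_core(request: dict) -> str:
    """Stand-in for the monolithic core system (hypothetical)."""
    return f"legacy:{request['function']}"


def pricing_service(request: dict) -> str:
    """Stand-in for a carved-out, single-function service (hypothetical)."""
    return f"microservice:{request['function']}"


class StranglerFacade:
    """Routes a request to a carved-out service if one exists, else to the legacy core."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._carved_out: Dict[str, Handler] = {}

    def carve_out(self, function: str, handler: Handler) -> None:
        # Register a new service as the owner of one functionality.
        self._carved_out[function] = handler

    def handle(self, request: dict) -> str:
        # Fall back to the legacy core for anything not yet carved out.
        handler = self._carved_out.get(request["function"], self._legacy)
        return handler(request)


facade = StranglerFacade(legacy_core)
facade.carve_out("pricing", pricing_service)
```

In this sketch, each additional call to `carve_out` moves one more functionality out of the core without changing what callers of the facade see, which is one way to picture the core becoming leaner over time.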

Two banks demonstrate the potential. While primary banking system functionalities (such as a general ledger) should and will remain within the core, one bank was able to make its monolith core banking systems leaner by approximately 35 percent by separating noncore functions into a microservice layer or specialized applications (for example, a pricing engine or collections service), enabling frequent changes to independent microservices or modules. Another bank applied the approach selectively on a limited number of journeys (including onboarding and cross-selling), carved out frequently changing functionalities required to support these journeys from legacy systems into independent microservices, and was able to shorten the time to market for features touching these functionalities from months to hours.

3: Sourcing and people: From outsourcing IT to strategic IT hiring balanced with partners and vendors

Many large, incumbent companies outsource a huge amount of their IT—if not all of it—partly for cost reasons, and partly due to a struggle to lure the right talent away from more compelling disrupters and digital natives. It’s little wonder, then, that vendors are proliferating and offering a new range of opportunities to help CIOs circumvent their talent-sourcing challenge.

However, companies that embrace enterprise agility cannot lean too hard on vendors and partners to provide turnkey IT services. In this world, the paradigm of fully outsourcing IT to a vendor and submitting requests for a needed change is slow and no longer suitable due to rapidly shifting needs. Competing with digital natives requires daily testing of minimum viable products (MVPs) and leaves little room for handovers, be it between departments within a company or with outside vendors. Furthermore, it requires continual renewal of technologies as development frameworks, libraries, and patterns evolve every year. One CEO was shocked when he learned that there were only about 100 people in the entire job market whose resumes indicated they were capable of working with his company’s legacy, vendor-based core system, compared with thousands who could work with open-source technologies. These needs are much easier to meet with internal talent embedded in core teams.

To address its talent needs, one European bank completely revamped its IT workforce by identifying the engineers who were actually coding and radically increasing the share of coders from around 10 percent to 80 percent. It also mapped all engineers on a capability scale (from novice to expert, on a scale of 1 to 5) and focused on creating a diamond-shaped talent composition. This configuration increased the share of its IT workforce that qualifies as expert or advanced engineers (the middle of the diamond) and who are exponentially more productive but not exponentially more expensive than less experienced engineers. The result was a total refresh of its approximately 2,000 full-time-equivalent (FTE) IT workforce, with the experienced engineer population making up 80 percent of the head count and achieving cost savings of 40 percent. An international telecom company internalized hundreds of engineers, mostly by insourcing but also by offering individual engineers or even vendor companies time-and-material B2B contracts. Another international bank internalized several thousand engineers, built a talent ecosystem with vendors and cloud providers to attract younger and scarcer talent, and launched reskilling initiatives to upskill more than 6,000 developers and architects.

Vendors, for their part, are also adapting and finding new ways to cooperate. On one hand, they offer more API-accessible software-as-a-service (SaaS) and platform-as-a-service (PaaS) solutions that offer specific turnkey functionalities that can be consumed off the shelf. On the other hand, companies provide highly specialized and experienced talent on demand—such as through strategic partnerships—offering alternatives to the typical vendor bundle, which includes novice employees.

4: Delivery process: From waterfall processes to continuous delivery

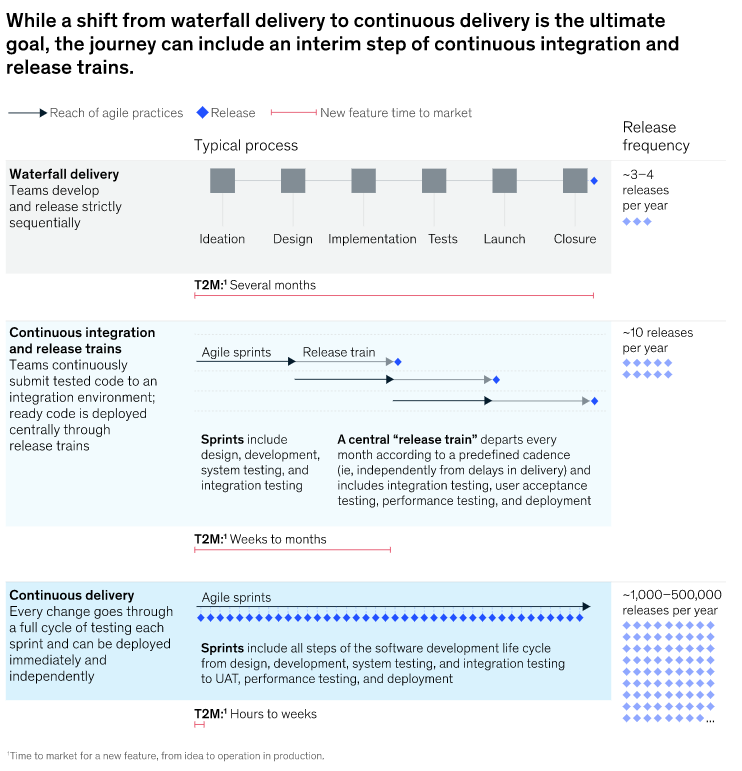

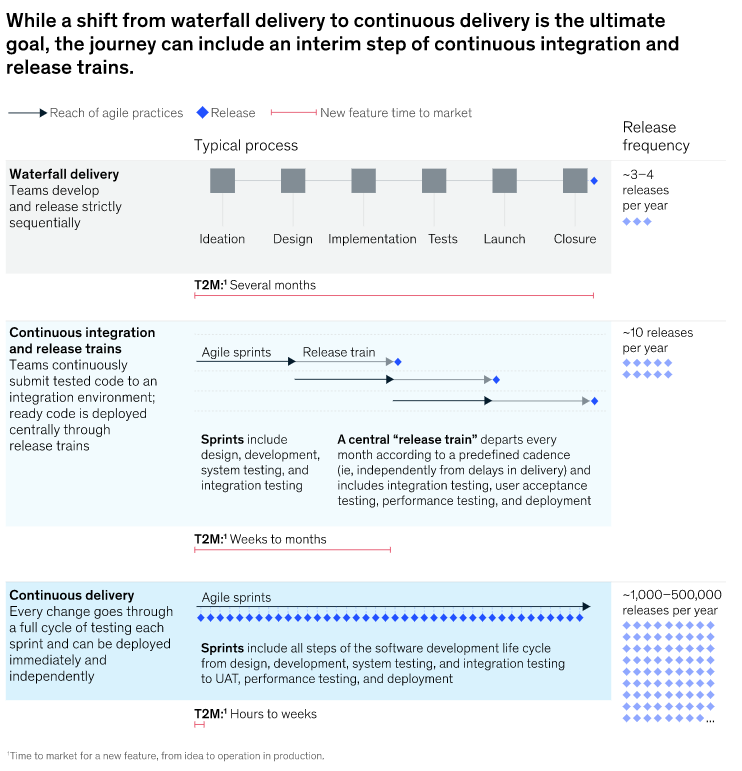

The speed of delivery is a constant source of debate between CEOs and CIOs; CEOs tend to become frustrated by the time that it takes a traditional organization to get through all the steps of waterfall delivery, but CIOs caution that going faster can lead to production incidents. While an average company is able to release three to four major upgrades of functionality per year and faster ones reach 10 to 12, digital-native companies such as Amazon, Google, and most digital start-ups can release at virtually any time as needed: weekly, daily, or hourly. This allows digital natives to A/B test different versions of the same functionality with different clients, test MVPs any time, incorporate customer feedback at pace, and continually evolve the business, reaching a true level of agility. Several traditional banks and telecoms in Europe and Asia have followed this path and reach as many as 20,000 releases per quarter, even on back-end systems.

Speeding up delivery does not require a trade-off with quality. Getting competent engineers working on autonomous microservices unlocks the true power of continuous integration and continuous delivery (CI/CD). The secret to making this shift lies in automating tasks to enable frequent incremental releases (Exhibit 4). And all stages of delivering a service, from coding to testing to deployment, are automated, including security testing in the DevOps pipelines—what is commonly referred to as DevSecOps. An advanced international bank went a step further and created an internal platform as a service for developers. Each developer could access templates of services through a global portal and automatically access required infrastructure, CI/CD pipeline, security tooling, and API definition with a click of a button. This enabled developers to focus on coding actual business functionalities rather than wasting time on setting up pipelines and infrastructure configurations.

Because not all the systems will be ready from day one, some companies will need to adopt a differentiated approach, allowing API-ready systems to release at any time and adopting release trains for systems that require regression testing, while investing in their automation. This automation and acceleration of the delivery process is typically driven by special delivery-platform-as-a-service units that support all “teams of teams” in a company in adopting engineering practices by setting up and maintaining tools such as CI/CD pipelines.
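The differentiated approach described above can be sketched as a simple scheduling rule. This is only an illustration under stated assumptions: the `System` type, the `api_ready` flag, and the fixed-interval release train are hypothetical simplifications, not a description of how any particular company schedules releases.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class System:
    name: str
    api_ready: bool  # True if the system can be released independently


def next_release_date(
    system: System, today: date, train_start: date, train_interval_days: int
) -> date:
    """Return the earliest date on which the system may release.

    API-ready systems release at any time; the others wait for the next
    release train, which departs every `train_interval_days` days.
    """
    if system.api_ready:
        return today
    if today <= train_start:
        return train_start
    elapsed = (today - train_start).days
    trains_passed = -(-elapsed // train_interval_days)  # ceiling division
    return train_start + timedelta(days=trains_passed * train_interval_days)


catalog = System("product-catalog", api_ready=True)
core = System("core-banking", api_ready=False)
```

As more systems gain automated regression testing, flipping their `api_ready` flag in a rule like this would move them off the train and onto on-demand releases.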

5: Infrastructure: From physical infrastructure to cloud, containers, and infrastructure as code

Finally, no discussion of deploying technology in an agile enterprise would be complete without mentioning cloud infrastructure—public, private, or hybrid. Similar to automation, cloud infrastructure allows companies to obtain computing and storage capacity on demand, skipping bureaucratic procedures and spending seconds provisioning an environment instead of waiting for weeks. As our previous research has shown, nearly 80 percent of enterprises have already been planning to put at least 10 percent of their workloads in the public cloud over the next three years.

Several banks and telecoms in Europe and Russia have moved their production and testing loads to cloud providers. For example, one Western European bank uses the cloud’s flexible capacity for hosting testing environments, an Eastern European bank uses it to host testing and production environments for selected apps and customer journeys in compliance with federal laws, and a European telecom has its entire API layer in the cloud. Most advanced companies use the infrastructure-as-code concept to obtain capacity through an API, directly requesting additional environments from the software rather than through physical hardware configurations. An IT infrastructure tribe is typically responsible for this.
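The infrastructure-as-code concept can be illustrated with a minimal sketch in which environments are declared as data and software works out what has to change. The `plan` function, the environment names, and the resource fields are hypothetical; real tooling would pass the resulting steps to a cloud provider's API, which is deliberately left out here.

```python
from typing import Dict, List, Tuple

Spec = Dict[str, Dict[str, int]]  # environment name -> resource settings


def plan(desired: Spec, current: Spec) -> List[Tuple[str, str]]:
    """Return the (action, environment) steps that make `current` match `desired`."""
    steps: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            steps.append(("create", name))
        elif current[name] != desired[name]:
            steps.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            steps.append(("delete", name))
    return steps


# Desired state as it might be kept in version control (illustrative values).
desired = {
    "test-a": {"cpus": 2, "storage_gb": 50},
    "test-b": {"cpus": 4, "storage_gb": 100},
}
# State currently running (illustrative values).
current = {
    "test-a": {"cpus": 2, "storage_gb": 20},
    "old-env": {"cpus": 1, "storage_gb": 10},
}
steps = plan(desired, current)
```

Because the desired state is plain data, requesting an additional environment in this sketch amounts to adding one entry and rerunning the plan rather than configuring hardware by hand.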

When deployed alongside CI/CD, cloud infrastructure has proven to radically improve several key IT metrics, mostly by eliminating wait time, rework, and demand forecasting. For example, companies have been able to compress cycle time by implementing standardized processes and automation, and to accelerate software deployments and testing. Some teams that used to spend two days per sprint on regression testing can now perform the same task in just two hours. In addition to improving productivity, companies can also significantly reduce IT overhead costs by optimizing IT asset usage as well as improving the overall flexibility of IT in meeting business needs. Indeed, cloud providers are increasingly offering much more sophisticated solutions than basic computing and storage, such as big data and machine-learning services. Cloud infrastructure and CI/CD have also been seen to accelerate time to market and increase the quality of service through the “self-healing” nature of standard solutions, for example by automatically allocating more storage to a database approaching capacity.
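The storage example of “self-healing” can be sketched as a simple threshold rule. The function name, the 80 percent threshold, and the 1.5 growth factor are assumptions chosen for illustration; managed cloud services apply their own policies.

```python
def heal_storage(
    used_gb: float,
    allocated_gb: float,
    threshold: float = 0.8,
    growth_factor: float = 1.5,
) -> float:
    """Return the new allocation: grow it once usage crosses the threshold."""
    if allocated_gb <= 0:
        raise ValueError("allocated_gb must be positive")
    if used_gb / allocated_gb >= threshold:
        return allocated_gb * growth_factor
    return allocated_gb
```

A monitoring job could call a rule like this on a schedule, so that capacity grows before the database fills up and without anyone having to raise a request.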
